How to Research B2B Leads at Scale Without Scraping or Automation

Most advice about scaling B2B lead research pushes teams toward automation as quickly as possible. Scraping tools, crawlers, and bulk list generators promise speed by removing humans from the process entirely.

That approach looks efficient early on. At scale, it usually breaks.

What teams actually need isn’t “more leads faster.” It’s a way to research a lot of leads without creating downstream waste — without flooding pipelines with low-signal data, without breaking workflows, and without relying on brittle automation that collapses the moment platforms change.

There is a middle ground. And it’s where most effective B2B research actually happens.

Why Lead Research Breaks When Volume Increases

When teams first try to scale lead research, they usually run into the same wall.

Manually researching businesses doesn’t feel scalable. Copying names, URLs, contact details, and notes across tabs and spreadsheets slows everything down. So the temptation is obvious: automate the collection.

The problem is that automation optimizes for collection speed, not decision quality.

As volume increases, context disappears. Leads arrive detached from the platform they came from, stripped of the signals that made them interesting in the first place. Sales teams inherit the mess — spending time disqualifying leads that never should have entered the pipeline.

At small volumes, that inefficiency is tolerable.

At scale, it becomes the dominant cost.

The Bottleneck Isn’t Finding Businesses — It’s Deciding Which Ones Matter

Most B2B teams are not starved for places to find leads.

Between search engines, professional networks, directories, marketplaces, and review platforms, there is no shortage of businesses to look at. The real constraint is deciding — quickly and repeatedly — which of those businesses are worth contacting.

That decision depends on signals:

- Is the business active?

- Does it match the target profile?

- Is there evidence of demand, growth, or dissatisfaction?

- Is it the right moment for outreach?

Automation struggles here because these signals are contextual. They live inside the platforms themselves, not in flat CSV exports.

This is why human judgment doesn’t disappear at scale — it becomes more valuable.

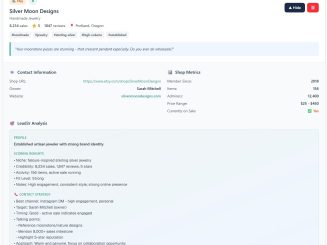

What “Manual Research at Scale” Actually Looks Like

There’s a misconception that manual research is inherently slow.

It isn’t.

What’s slow is the overhead wrapped around it: copy-paste work, reformatting fields, switching tabs, normalizing data after the fact.

At scale, effective manual research looks like this:

A researcher navigates naturally across platforms, evaluates businesses in context, and captures only the leads that pass an initial judgment check. Structure is applied at the moment of research, not hours later during cleanup.

The human still decides what is worth saving.

The system handles how it’s saved.

That distinction matters.

Where High-Signal B2B Data Actually Comes From

Different platforms expose different parts of the picture. Teams that research well at scale don’t rely on a single source — they triangulate.

On Google Maps, businesses reveal operational reality: location, hours, reviews, and local presence. It’s often the fastest way to confirm whether a company is real and active.

https://lead3r.net/platforms/google-maps-lead-extraction/

On LinkedIn Company Pages, the signal shifts. You see organizational structure, employee count, hiring patterns, and positioning. This matters when targeting by role, size, or growth stage.

https://lead3r.net/platforms/linkedin-company-leads/

On review-based platforms, a different signal emerges entirely: reputation, service consistency, and pain points expressed by customers themselves.

https://lead3r.net/platforms/trustpilot-business-leads/

Scaling research isn’t about squeezing more out of one platform. It’s about comparing these signals consistently across platforms, without losing context.

Why Scraping Looks Scalable — Until It Isn’t

Scraping tools promise scale by removing judgment from the loop.

That works briefly, then degrades.

As layouts change, protections tighten, and platforms evolve, scrapers break. Data becomes inconsistent. Fields don’t line up across sources. Leads arrive faster than they can be evaluated, shifting work downstream instead of eliminating it.

The hidden cost isn’t technical — it’s operational. Sales teams lose trust in the data. Qualification moves later in the funnel. Throughput drops even as volume rises.

At that point, “more leads” actively hurts performance.

A More Durable Model for Scale

The alternative is not abandoning scale — it’s changing where scale is applied.

Instead of scaling collection indiscriminately, scale the research throughput:

- Let humans choose which businesses are worth examining

- Capture structured data only when that decision is made

- Preserve platform context alongside the lead

- Normalize fields so leads from different sources can be compared immediately
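To make “structure at the moment of decision” concrete, here is a minimal Python sketch of what a captured lead record might look like. Everything in it is a hypothetical illustration, not any specific tool’s schema: the field names (`company_name`, `domain`, `source_platform`, `source_url`, `notes`), the `KNOWN_PLATFORMS` set, and the `capture_lead` helper. It shows three ideas from the list above. A record is created only after a researcher approves the business. The record keeps a link back to the platform where the lead was evaluated. The website is normalized to a bare domain on the way in, so records from different sources line up.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import urlparse

# Hypothetical set of platforms a researcher might work across.
KNOWN_PLATFORMS = {"google_maps", "linkedin", "trustpilot"}


@dataclass(frozen=True)
class Lead:
    """A lead record created at the moment a researcher approves it (hypothetical schema)."""
    company_name: str
    domain: str            # normalized website domain, used to compare leads across sources
    source_platform: str   # platform where the business was evaluated
    source_url: str        # link back to the original context
    notes: str = ""        # the researcher's reason for saving it
    captured_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def normalize_domain(website: str) -> str:
    """Reduce a website URL to a bare lowercase domain, e.g. 'acme.com'."""
    if "://" not in website:
        website = "https://" + website  # urlparse needs a scheme to find the host
    host = (urlparse(website).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def capture_lead(approved: bool, company_name: str, website: str,
                 source_platform: str, source_url: str,
                 notes: str = "") -> Optional[Lead]:
    """Store a structured record only if the researcher approved the business."""
    if not approved:
        return None  # disqualified at the top of the funnel: nothing enters the pipeline
    if source_platform not in KNOWN_PLATFORMS:
        raise ValueError(f"unknown platform: {source_platform}")
    return Lead(
        company_name=company_name.strip(),
        domain=normalize_domain(website),
        source_platform=source_platform,
        source_url=source_url,
        notes=notes.strip(),
    )
```

Keeping the approval check inside `capture_lead` mirrors the model above: a business the researcher rejects never becomes a record, so there is nothing to clean up later.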

This model keeps judgment at the top of the funnel while still allowing research volume to increase. It removes friction without removing control.

That’s the difference between speed that compounds and speed that collapses.

Scaling Without Replacing Judgment

The teams that research effectively at scale aren’t faster because they automate more. They’re faster because they eliminate waste.

They research more leads per hour by:

- Avoiding copy-paste work

- Reducing tab switching

- Applying structure at the point of discovery

- Disqualifying earlier, not later

Judgment doesn’t slow scale.

Unstructured workflows do.

Keeping Cross-Platform Research Coherent

Once teams move beyond a single source, workflow design matters more than tool choice.

Without consistent structure, cross-platform research becomes fragmented. Leads can’t be compared. Context gets lost. Scale evaporates.

That’s why serious research workflows focus on keeping multiple platforms inside a single, coherent system.

Scale isn’t about doing more things. It’s about doing the same things without friction.
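One way to keep multi-platform research in a single system is to group every captured lead under the company it describes, so signals from Google Maps, LinkedIn, and review platforms can be read side by side. The Python sketch below assumes the website domain is a usable join key. That is an assumption: it breaks for businesses without a website or for chains that share one domain. `CapturedLead`, `merge_by_domain`, and `triangulated` are hypothetical names used only for illustration.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class CapturedLead(NamedTuple):
    """Minimal stand-in for a lead saved on one platform (hypothetical fields)."""
    domain: str           # already-normalized website domain, e.g. "acme.com"
    source_platform: str  # e.g. "google_maps", "linkedin", "trustpilot"
    signal: str           # the researcher's note on what this platform showed


def merge_by_domain(leads: List[CapturedLead]) -> Dict[str, Dict[str, List[str]]]:
    """Group leads from different platforms under one company, keyed by domain.

    Returns {domain: {platform: [signals, ...]}}.
    """
    profiles = defaultdict(lambda: defaultdict(list))
    for lead in leads:
        profiles[lead.domain][lead.source_platform].append(lead.signal)
    # Convert nested defaultdicts to plain dicts for a predictable return value.
    return {domain: dict(platforms) for domain, platforms in profiles.items()}


def triangulated(profiles: Dict[str, Dict[str, List[str]]],
                 min_platforms: int = 2) -> List[str]:
    """Domains with signals from at least `min_platforms` different platforms."""
    return sorted(d for d, platforms in profiles.items() if len(platforms) >= min_platforms)
```

For example, a company saved once from Google Maps and once from LinkedIn ends up as one profile with two platform entries, and `triangulated` would list it. A company seen on only one platform would not appear.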

Final Thoughts

Researching B2B leads at scale doesn’t require scraping, crawling, or automated browsing.

It requires:

- Human judgment at the top of the funnel

- Platforms that expose real signals

- Structure applied at the moment of research

- Workflows designed to reduce waste, not just effort

When those pieces are in place, scale stops being fragile.

You don’t get there by removing people from the process.

You get there by removing everything that slows them down.
