How to Research B2B Leads at Scale Without Scraping or Automation

Scaling B2B lead research is usually framed as a choice between two bad options: either spend hours manually copying data, or rely on scraping and automation that produces noisy, unreliable results.

In practice, neither approach works well once volume matters.

Yet many teams need to research large numbers of leads quickly, without creating risk, breaking workflows, or flooding sales pipelines with low-signal data.

This article explains how B2B teams actually research leads at scale — without scraping or automated browsing — and why that approach produces better outcomes over time.

Why Most “Scalable” Lead Research Breaks Down

When teams try to scale lead research, the usual solution is automation.

Scrapers, bots, and bulk list tools promise speed by removing humans from the process entirely. On paper, this looks efficient. In practice, it introduces several problems:

- Data is collected without context

- Listings are outdated or duplicated

- Qualification signals are lost

- Platforms change and tools break

- Sales teams inherit cleanup work

The result is often more leads, but less usable output per hour.

At scale, that inefficiency compounds.

The Real Bottleneck Isn’t Volume — It’s Signal

Most B2B research workflows don’t fail because they can’t find enough businesses.

They fail because:

- Too many leads require follow-up just to disqualify

- Data from different platforms isn’t comparable

- Research effort doesn’t translate cleanly into outreach

That’s why simply increasing volume rarely improves revenue.

What actually scales is signal extraction — the ability to quickly understand whether a business is worth contacting before outreach begins.

This is where manual research still matters.

What “Manual Research at Scale” Actually Means

Manual research doesn’t mean slow or unstructured.

At scale, it means:

- A human chooses which businesses are worth examining

- Research happens directly on the platforms where signals exist

- Data is captured intentionally, not indiscriminately

- Structure is applied at the moment of research

Human judgment stays in place.

The friction around it is removed.

This approach avoids the core failure mode of automation: collecting data faster than it can be evaluated.

Where B2B Research Signals Actually Live

Different platforms expose different signals. Teams that research effectively at scale use more than one source.

For example:

- Google Maps business listings reveal operational reality: location, activity, reviews, and local presence (https://lead3r.net/platforms/google-maps-lead-extraction/)

- LinkedIn Company Pages show organizational structure, size, hiring patterns, and positioning (https://lead3r.net/platforms/linkedin-company-leads/)

- Review-based platforms surface reputation, service consistency, and customer sentiment (https://lead3r.net/platforms/trustpilot-business-leads/)

Each platform answers a different question.

Scaling research means comparing those answers consistently, not scraping one source aggressively.
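To make "comparing answers consistently" concrete, here is a minimal Python sketch that groups records saved from several platforms into one profile per business. It assumes each saved record is a plain dict with hypothetical `platform`, `website`, and `signals` keys, and that a normalized website domain is a good enough join key. Both are illustrative assumptions, not Lead3r's actual data model, and nothing here fetches or crawls pages: it only organizes records a researcher has already chosen to save.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional
from urllib.parse import urlparse


def domain_key(website: Optional[str]) -> Optional[str]:
    """Reduce a website value to a bare lowercase host, e.g. 'acme.com'."""
    if not website:
        return None
    # Accept values saved with or without a scheme ("acme.com" vs "https://acme.com").
    url = website if "://" in website else "https://" + website
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host or None


def group_by_business(records: List[Dict[str, Any]]) -> Dict[str, Dict[str, Dict[str, Any]]]:
    """Group per-platform records into {domain: {platform: signals}}.

    Records without a usable website are skipped so they can be reviewed by hand.
    """
    profiles: Dict[str, Dict[str, Dict[str, Any]]] = defaultdict(dict)
    for rec in records:
        key = domain_key(rec.get("website"))
        if key is None:
            continue
        profiles[key][rec["platform"]] = rec["signals"]
    return dict(profiles)


# Hypothetical sample records for one business seen on three platforms.
records = [
    {"platform": "google_maps", "website": "https://www.acme.com/",
     "signals": {"rating": 4.6, "review_count": 212}},
    {"platform": "linkedin", "website": "acme.com",
     "signals": {"employee_range": "51-200"}},
    {"platform": "trustpilot", "website": "http://acme.com/reviews",
     "signals": {"trust_score": 4.2}},
]

profiles = group_by_business(records)
print(sorted(profiles["acme.com"]))  # ['google_maps', 'linkedin', 'trustpilot']
```

The three records collapse into a single `acme.com` profile with one signal set per platform, so a reviewer can read local activity, company size, and review sentiment side by side. Domain matching is a deliberate simplification: businesses without a website, or listings that point to a shared host such as a social-media page, would need a different key or a manual check.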

Why Scraping Fails as Volume Increases

Scraping tools optimize for collection speed, not research quality.

As volume grows, scraping tends to:

- Strip away platform-specific context

- Produce inconsistent fields across sources

- Generate false positives that must be filtered later

- Break when layouts or protections change

That creates downstream cost: sales teams spend time qualifying data that should never have entered the pipeline.

At scale, that waste matters more than raw lead count.

This is why many teams eventually move away from scraping-heavy workflows, even if those workflows worked briefly early on.

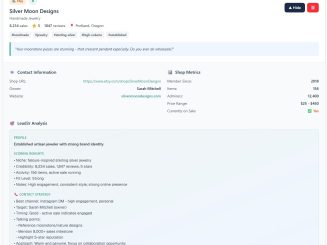

A Better Model: Human-Triggered, Structured Research

The alternative isn’t going back to spreadsheets.

Modern manual research at scale works when:

- The researcher navigates naturally across platforms

- Data is captured only when a lead is worth saving

- Extracted data is normalized into consistent fields

- Platform context is preserved alongside the lead
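The last two conditions, normalized fields plus preserved context, can be sketched as a small data structure. The Python below is a minimal illustration under stated assumptions: the `Lead` shape, the `normalize` helper, and every per-platform source field name in `FIELD_MAPS` are hypothetical, and real exports will use different names. The step is meant to run only when a researcher decides a lead is worth saving.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional

# Hypothetical source field names per platform; real exports will differ.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "google_maps": {"name": "title", "website": "website", "location": "address"},
    "linkedin": {"name": "company_name", "website": "company_url", "location": "headquarters"},
    "trustpilot": {"name": "display_name", "website": "domain", "location": "country"},
}


@dataclass
class Lead:
    """A saved lead in one shared shape, whatever platform it came from."""
    name: str
    website: Optional[str]
    location: Optional[str]
    source_platform: str
    source_url: str
    # Platform-specific fields kept as-is so the original context travels with the lead.
    context: Dict[str, Any] = field(default_factory=dict)


def normalize(platform: str, raw: Dict[str, Any], source_url: str) -> Lead:
    """Map one raw record onto the shared Lead fields; keep everything else as context."""
    mapping = FIELD_MAPS[platform]
    shared_keys = set(mapping.values())
    return Lead(
        name=str(raw[mapping["name"]]).strip(),
        website=raw.get(mapping["website"]),
        location=raw.get(mapping["location"]),
        source_platform=platform,
        source_url=source_url,
        context={k: v for k, v in raw.items() if k not in shared_keys},
    )
```

Keeping unmapped fields in `context` instead of discarding them is the design choice that matters here: a Google Maps rating and a LinkedIn employee range stay attached to the lead, while name, website, and location remain comparable across every source.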

This model keeps humans in control of judgment, while allowing volume to increase without creating chaos.

It’s the middle ground between slow manual work and brittle automation.

Scaling Without Replacing Judgment

One of the most common mistakes teams make is trying to remove judgment from lead research.

In reality, judgment is the competitive advantage.

The teams that scale effectively are the ones that:

- Research more leads per hour

- Eliminate copy-paste and reformatting work

- Preserve context across platforms

- Decide faster which leads are worth outreach

That’s how scale actually shows up — not as “more data”, but as more usable output from the same effort.

Using Multiple Platforms Without Creating Overhead

Cross-platform research only works if the workflow stays simple.

When teams jump between platforms without structure, scale disappears. Data becomes fragmented and comparisons break down.

The solution is not fewer platforms — it’s consistent structure applied at the moment of research.

For teams researching across multiple sources, it's important to know which platforms can be integrated into a single workflow.

Final Thoughts

Researching B2B leads at scale doesn’t require scraping, crawling, or automated browsing.

It requires:

- Human judgment

- Cross-platform awareness

- Structured data capture

- A workflow designed to reduce waste

When those elements are in place, teams can research large volumes of leads without sacrificing quality or control.

Scale doesn’t come from removing people from the process.

It comes from removing friction.

